Published on Medium by Alexandra Furman

At Stylight, we’ve recently been dealing with how to make our user research process more extensible. This came about after our second user researcher, Alex, came on board. The days of stretching myself between product teams, getting scrappy pulling together research rounds, and maintaining structure for my own organizational purposes were finally coming to an end.

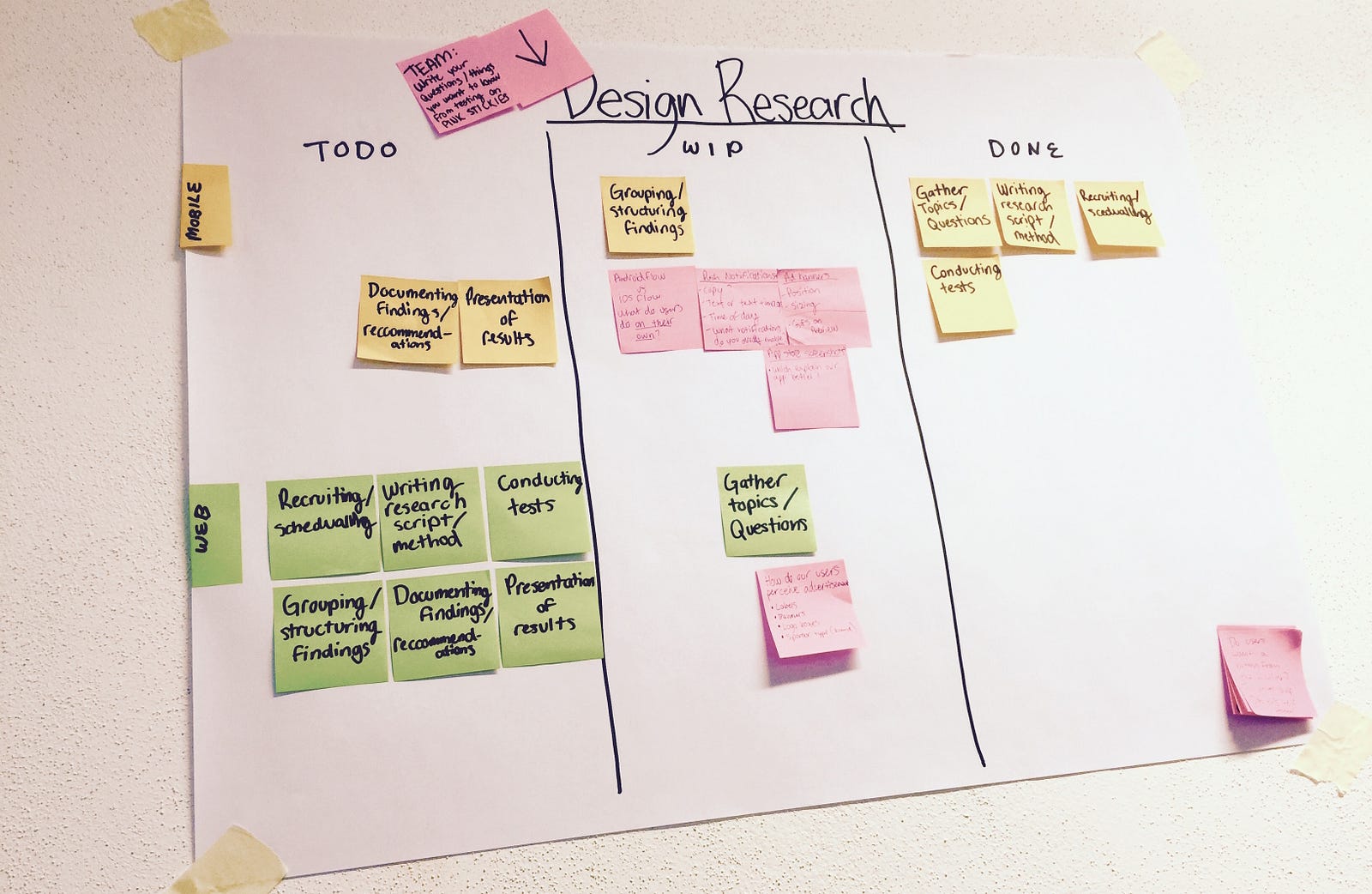

Alex jumped into things by orienting herself with our web team, while I continued to focus primarily on the apps. Generally speaking, we do aim to keep ourselves interchangeable between both product teams. We decided in the first days that we would attend the daily standups of the two teams, and should both be informed enough to conduct research for the website as well as the mobile apps.

Expanding our research crew has been an extremely positive push forward for UX at Stylight, but it also came with a brutal realization. I needed to take a serious look at how my techniques for collecting and communicating research would transfer to new Stylight researchers, as well as how we could collectively bring our knowledge to the table in order to establish a collaborative, consistent user research culture.

MY WORKFLOW IN A NUTSHELL

As it stands, each research round begins with me writing a test plan. I then start recruiting participants, either from my own recruiting contact list or by sending a screener to a recruiting agency and going through the process with them. We’re currently testing women only, as our target demographic is millennial women. As I wait for replies and schedule participants in, I begin to write up a research script with questions I’d like to ask each of them.

The topics we come up with for testing are based on grey areas surrounding new features we’ve recently designed, unanswered questions we have after the implementation of a solution, or questions we have about the motivations of our users. It’s not only me as the researcher coming up with the questions. In fact, they usually come from the team. I find that the best queries and insights arise when our developers, designers, and product owner are curious about something. We run frequent A/B tests to evaluate the success of things we’ve implemented, especially on Android, but it’s often the case that the numbers are too close to call, the results are not statistically significant, or there’s simply not enough of an explanation as to why users are behaving a certain way. Sometimes our questions are a follow-up to these A/B tests, to understand why something went the way it did and get a better idea of how to move forward.

After the test has been conducted in whatever form it may be, I spend a day synthesizing my research by clustering similar user issues that have come up in the test. Last and most importantly, I pull together a report and make a presentation/workshop to communicate my findings to the team.

MEANS AND METHOD

As the lone user researcher at Stylight (and the second ever), I kind of had to settle into the research process I personally felt most comfortable pulling insights from. I’m not necessarily in my element slinging free coffees around town, chatting with strangers, and bringing in feedback through regular guerrilla testing. I find that I’m able to pull more from each participant if I have them in a chill environment, can offer them a bit of a better incentive, and have my hour planned out with a few different research techniques. As it currently stands, I’m most often conducting in-house usability tests, doing the odd bit of guerrilla testing, and running a diary study once in a while.

The in-house usability tests I conduct usually consist of five participants per study, who come into the office to participate in an hour-long session. These in-house testing sessions vary in method from round to round. Usually each session has a pre-evaluation interview where we go over shopping/fashion inspiration habits, and a post-evaluation interview where we reflect on the overall impression of the app. What comes between the pre-evaluation and post-evaluation interviews is another super flexible part of our research. Sometimes we will go for a more standard usability test, with given tasks, where the participant is asked to speak aloud about what they’re seeing and doing. Other times we’ll mix in desirability evaluations with card sorting if what we’re testing is more visual or we’re parallel testing designs in prototypes. Often we’ll get participants to evaluate our interface or that of our competitors using the SUS (System Usability Scale) questionnaire, developed at Digital Equipment Corporation, which provides an easy score of the system’s perceived usability. This is great if we want to do a quick and dirty benchmark of one of our interfaces against a previous version of it, another interface of ours (the mobile vs desktop website for example), or the interface of a competitor when testing any given feature/function.
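For anyone curious how that SUS score comes together, here is a minimal sketch in Python of the standard scoring rule: ten statements rated from 1 to 5, where odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5 to land on a 0 to 100 scale. The function names `sus_score` and `mean_sus` are made up for illustration, and this isn't necessarily how we tally things at Stylight.

```python
def sus_score(responses):
    """Return the SUS score (0-100) for one participant.

    `responses` holds the ten ratings (1-5) in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: rating minus 1.
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: 5 minus rating.
            total += 5 - rating
    return total * 2.5


def mean_sus(all_responses):
    """Average the SUS scores of several participants (hypothetical helper)."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)
```

With five participants per round, calling `mean_sus` on their five response lists gives a single number per interface, which is what makes the quick benchmark between versions or competitors possible. Keep in mind that an average over five people is a rough indicator rather than a precise measurement.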

More recently I’ve been experimenting with remote usability testing, in order to have participants test our apps in a more natural environment. We do the remote testing by having people take part in a three-step “usability week”. The first part involves an orientation meeting where the participants drop by our office, sign a participation form, and we install a Lookback-loaded version of our iOS app on their phones. Lookback is a fairly new service that records taps, touches, and video each time a user opens your product for a session. The second part involves the participants doing at-home sessions for 5 days. During this time we ask them to use the app at least once a day, for as long as they’d like. Each mini session is automatically uploaded to our dashboard on the Lookback website, and we ask participants to follow up each session by sending me a quick WhatsApp message. This WhatsApp message should explain what went right, what went wrong, and their overall experience during the session. The third part is the post-evaluation follow-up, where participants are asked to complete a quick survey to reflect on the week and their impression of the Stylight app. This remote testing method eliminates a bit of the participant’s attentional bias, allows participants to develop a more contextual impression of the product, and really helps us understand the various use cases of our products.

ADDING A DASH OF CONSISTENCY

So, back to the research structure: what was there to design, then? I figured I had my research methods and personal process down pat, I was collecting valuable insights, and I was delivering informed recommendations for the team that were yielding positive results. However, the overall process needed to be cohesive enough for both user researchers to have consistent research documentation internally, and a similar delivery method for bringing findings to the product teams.

We set out to establish order. This meant reorganizing our filing and reporting, as well as meticulously organizing our shared Google Drive folder. This also meant making the delivery of insights more consistent (graphic design and structure), and agreeing that each round of research would end with a 90-minute presentation/workshop for the team. The biggest revelation here was agreeing that the research methods in between would be a project-based personal preference.

A preference, in that we need to call our own shots on the appropriate method. The way we collect insights is completely dependent on the questions we want to answer. Sometimes we have one feature that needs a quick check and we need to run out the door for some guerrilla testing. Sometimes I feel that participants give more thorough answers to pointed questions over WhatsApp after a session. Sometimes we have bigger questions about the overall user journey and their intentions, and need to watch how they use our app over the course of a week.

We’ve now got the ground laid for a happy, healthy, productive research culture to develop as Stylight continues to grow. If Alex, our other user researcher, wants to read through my test plan or report to ensure that we aren’t duplicating research, she is able to do so and knows exactly where to find it. If she happens to be ill on test day, I’m able to pick up her script, understand what she’s testing, and fill in for her. In terms of delivering information to the team, we now have a consistent presentation/workshop format, so the teams can get used to receiving insights from us and participating in discussions about how to move our products forward. Since this additional structure has been put into place, our teammates have seen more consistency in how we involve them in solution seeking and inform them of recommendations. Just as importantly, Alex and I can now focus on learning, exchanging, and evolving completely new ways of getting to know our users in that creative zone where the methods live.